(Image source: youtube.com)

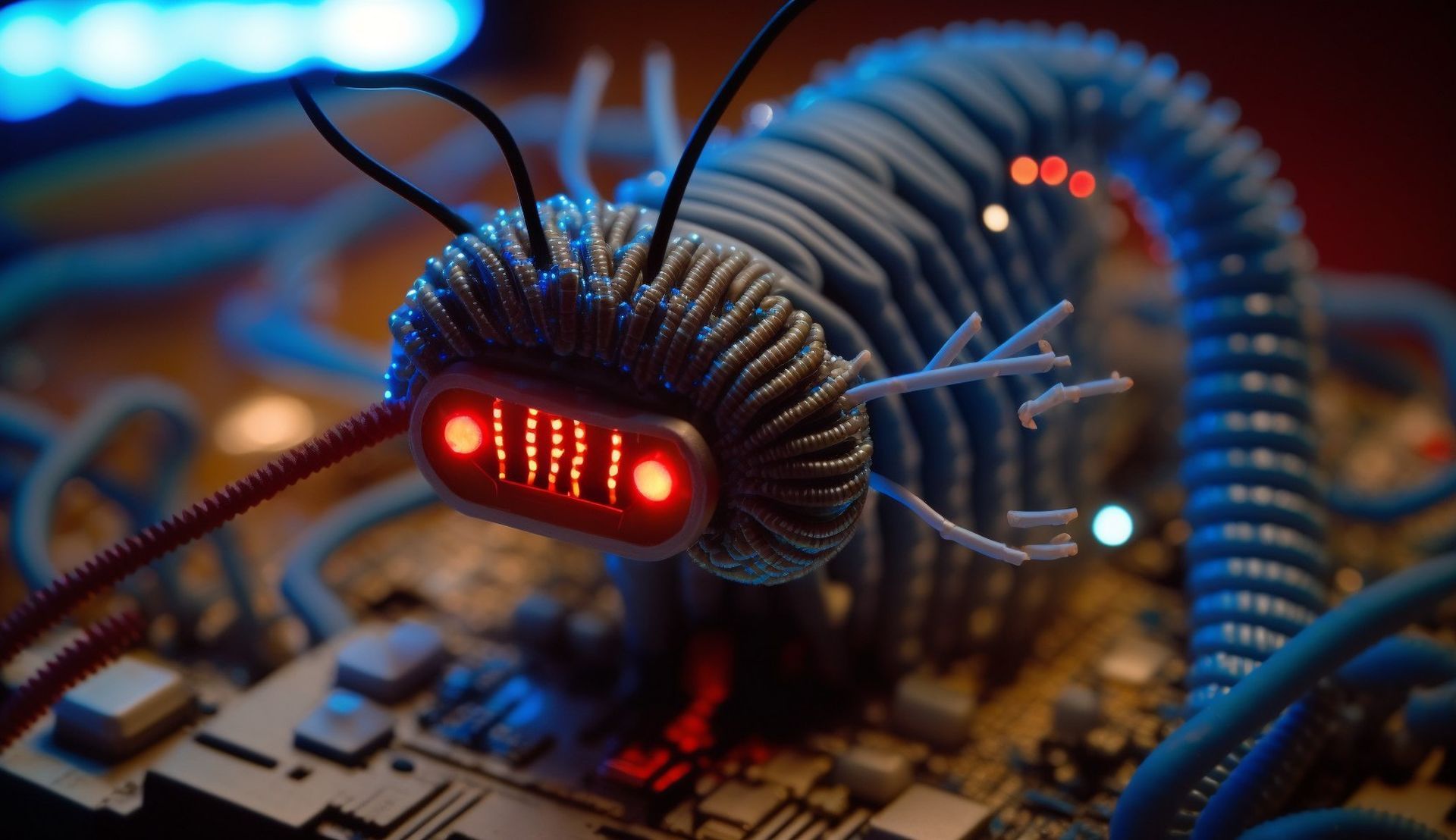

The digital world is expanding quickly, and generative AI (ChatGPT, Gemini, Copilot, and so forth) is currently leading this progress. A vast network of AI-powered platforms surrounds us and offers answers to many of our problems. Need a diet plan? You get a personalized one. Having trouble writing code? AI will produce the complete program for you in real time. But this growing reliance on an expanding AI ecosystem also brings new dangers that can seriously harm you. One such concern is the emergence of AI worms, which can steal private information and bypass the safety measures built into generative AI systems.

Researchers from Cornell University, Technion-Israel Institute of Technology, and Intuit have developed a new kind of malware called “Morris II,” which they describe as the first generative AI worm: it can spread itself from one system to another and steal data along the way, according to an article published in Wired. Named after the Morris worm of 1988, one of the first computer worms to spread across the internet, Morris II can exploit security weaknesses in popular AI models such as ChatGPT and Gemini. According to researcher Ben Nassi of Cornell Tech, “It basically means that now you have the ability to conduct or to perform a new kind of cyberattack that hasn’t been seen before.”

Although the worm was built and studied only in a controlled environment, and no such malware has been seen in the real world yet, the researchers worry that this kind of attack could be used to steal data or send spam emails to millions of people through AI assistants. They are warning developers and technology companies about this risk and urging them to act now.

How does an AI worm operate?

Morris II can be thought of as a cunning computer worm. Its target is email assistants powered by generative artificial intelligence (AI).

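Morris II relies on what the researchers call an “adversarial self-replicating prompt”: an input that tricks the AI model into reproducing the prompt itself in its response. In an AI email assistant, the malicious prompt arrives inside an email. When the assistant later drafts a reply, it pulls stored emails in as context, so the poisoned email ends up shaping the reply, and the reply carries the prompt on to new recipients, whose assistants repeat the cycle. Along the way, the same prompt can push the model to reveal or alter data it has access to, which may result in information theft or the spread of malware.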
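To make the self-replication loop concrete, here is a toy simulation in Python. Everything in it is hypothetical: `PAYLOAD` is an inert marker string rather than a real attack prompt, `toy_assistant_reply` is a mock that simply copies the marker whenever it appears in the mail it retrieves (standing in for a model that has been tricked), and `simulate_spread` is an illustrative breadth-first walk over a made-up contact graph. It only shows why a single poisoned email can reach every inbox connected to the first victim.

```python
from collections import defaultdict, deque

# Inert marker standing in for an adversarial self-replicating prompt (hypothetical).
PAYLOAD = "<<SELF-REPLICATING-PROMPT>>"


def toy_assistant_reply(retrieved: list[str]) -> str:
    """Mock email assistant: copies the payload if any retrieved email contains it."""
    reply = "Thanks for your message."
    if any(PAYLOAD in email for email in retrieved):
        reply += " " + PAYLOAD  # the self-replication step
    return reply


def simulate_spread(contacts: dict[str, list[str]], patient_zero: str) -> set[str]:
    """Return every user whose stored mail ends up containing the payload."""
    inboxes: defaultdict[str, list[str]] = defaultdict(list)  # per-user mail store used as context
    inboxes[patient_zero].append("Hello! " + PAYLOAD)  # the attacker's initial email
    infected = {patient_zero}
    queue = deque([patient_zero])
    while queue:
        user = queue.popleft()
        # The infected user's assistant auto-replies to each of the user's contacts.
        for contact in contacts.get(user, []):
            reply = toy_assistant_reply(inboxes[user])
            inboxes[contact].append(reply)
            if PAYLOAD in reply and contact not in infected:
                infected.add(contact)
                queue.append(contact)
    return infected


contacts = {"alice": ["bob", "carol"], "bob": ["dave"], "dave": ["alice"], "eve": ["alice"]}
print(sorted(simulate_spread(contacts, "alice")))  # ['alice', 'bob', 'carol', 'dave']
```

Note that "eve" stays clean: she sends mail to an infected user but never receives a reply from one, so the payload never lands in her inbox.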

According to the researchers, Morris II has two ways in:

Text-based: It hides malicious prompts inside emails, tricking the assistant into getting around its own safeguards.

Image-based: It embeds hidden instructions in images, which helps the worm spread further.

Put plainly, Morris II is a worm that deceives the AI behind email systems by feeding it carefully crafted inputs.

What happens after an AI assistant is deceived?

When Morris II successfully infiltrates an AI assistant, it not only breaks the assistant’s safety rules but also puts user privacy at risk. By exploiting generative AI’s capabilities, the malware can extract private data from emails, such as names, phone numbers, credit card numbers, and Social Security numbers.
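Because the data at stake follows recognizable formats, one developer-side safeguard is to treat whatever the assistant drafts as untrusted and scan it before it leaves the mailbox. The sketch below is a minimal illustration under stated assumptions: `redact_outgoing`, `luhn_valid`, and the two regular expressions are hypothetical names, the patterns assume US-style Social Security numbers and 13 to 19 digit card numbers, and the Luhn checksum is used only to skip digit runs that cannot be valid card numbers.

```python
import re

# Illustrative patterns only (assumptions): US-style SSNs and 13-19 digit card numbers.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(number: str) -> bool:
    """Luhn checksum, used to skip digit runs that cannot be real card numbers."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_outgoing(draft: str) -> str:
    """Mask SSNs and Luhn-valid card numbers in an AI-drafted email before sending."""
    draft = SSN_RE.sub("[REDACTED SSN]", draft)

    def mask_card(match: re.Match) -> str:
        text = match.group()
        return "[REDACTED CARD]" if luhn_valid(text) else text

    return CARD_RE.sub(mask_card, draft)


print(redact_outgoing("SSN 123-45-6789, card 4111 1111 1111 1111."))
# SSN [REDACTED SSN], card [REDACTED CARD].
```

Pattern matching like this only catches well-formatted identifiers; names or free-text secrets would need a dedicated data-loss-prevention tool.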

Here are some tips to help you stay secure.

It’s important to remember that this AI worm is still a research proof of concept and hasn’t been seen in the wild yet. But as AI systems become more interconnected and more capable of acting on our behalf, experts believe it is a potential security issue that businesses and developers should keep in mind.

The following are some strategies to prevent AI worms:

Safe design: Developers should build security into AI systems from the start. They should follow established security practices and avoid blindly trusting the output of AI models.

Human oversight: Keeping people in the decision-making loop, and making sure AI systems cannot take actions without permission, reduces the risk.

Monitoring: Watching for anomalous behavior, such as the same prompt showing up again and again across messages, can help detect security breaches in AI systems.
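The monitoring and human-oversight strategies can be combined in a single send path. This is a minimal sketch, not a production guardrail: `DraftReply`, `looks_self_replicating`, and `send_with_oversight` are hypothetical names, the check assumes that a self-replicating prompt shows up as a long verbatim copy of the model’s input inside its output, and the 80-character threshold is an arbitrary illustrative value. The comparison uses Python’s standard `difflib.SequenceMatcher`.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Callable


@dataclass
class DraftReply:
    """A reply the assistant wants to send (hypothetical structure)."""
    recipient: str
    body: str


def longest_shared_run(source: str, output: str) -> int:
    """Length, in characters, of the longest substring shared by source and output."""
    matcher = SequenceMatcher(None, source, output, autojunk=False)
    return matcher.find_longest_match(0, len(source), 0, len(output)).size


def looks_self_replicating(source: str, output: str, min_run: int = 80) -> bool:
    """Flag output that copies a long verbatim chunk of the model's input.

    `min_run` is an arbitrary illustrative threshold, not a tuned value.
    """
    return longest_shared_run(source, output) >= min_run


def send_with_oversight(
    model_input: str,
    draft: DraftReply,
    approve: Callable[[DraftReply], bool],
    send: Callable[[DraftReply], None],
) -> str:
    """Monitoring first, then a human decision; nothing is sent automatically."""
    if looks_self_replicating(model_input, draft.body):
        return "quarantined"  # suspicious copy of the input: hold for security review
    if not approve(draft):  # human in the loop, e.g. a confirmation dialog
        return "rejected"
    send(draft)
    return "sent"
```

Email replies often quote earlier messages, so a verbatim-copy check will raise false positives; that is why this sketch quarantines flagged drafts for review instead of silently dropping them. In practice `approve` might be a confirmation dialog in the mail client, and `send` the real delivery call.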

https://youtu.be/4NZc0rH9gco?si=rwPaGeWdAFxmqH_5